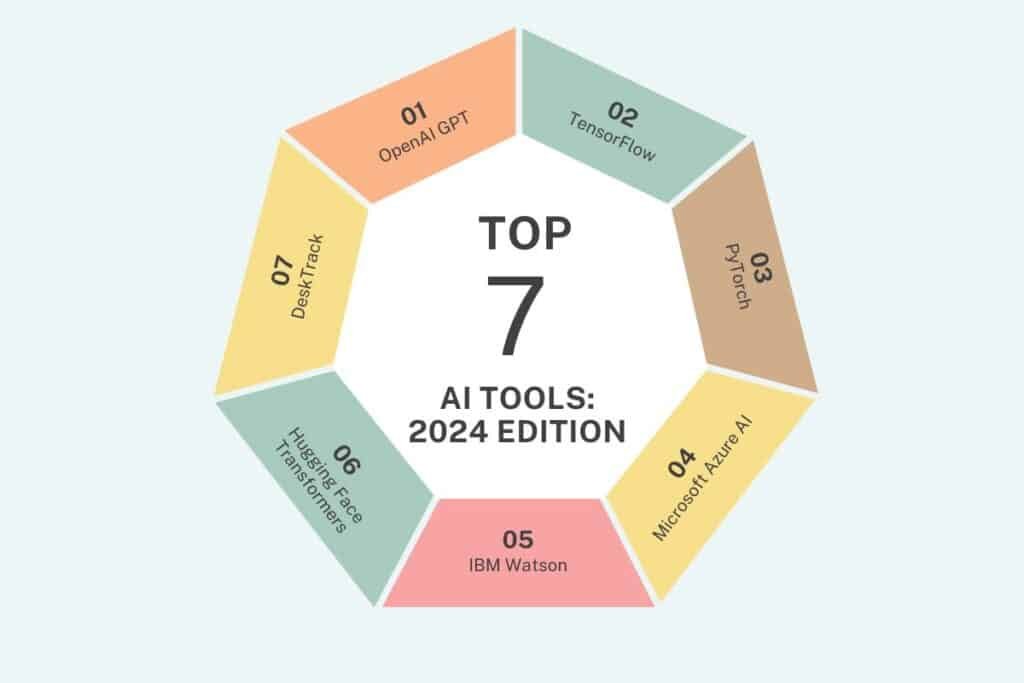

In the rapidly evolving field of artificial intelligence, businesses and individuals need to stay ahead of the curve. As we head into 2024, the AI landscape is expanding with sophisticated tools that let users build applications and automate work with unprecedented ease and efficiency. In this guest post, we explore seven of the top AI tools for 2024, each of which offers distinct ways to transform businesses and drive growth.

1. OpenAI GPT (Generative Pre-trained Transformer):

OpenAI GPT is a family of large language models known for their natural language processing capabilities. From text generation and language translation to contextual understanding, GPT has proven powerful across a wide range of applications, helping companies streamline communication, improve customer experience, and extract actionable insights from vast stores of text data.

Key features of OpenAI GPT:

a.) Pre-trained Model:

GPT is pre-trained on large quantities of text, much of it drawn from the Internet, which enables it to produce natural, human-like sentences out of the box.

b.) Transformer Architecture:

GPT is based on the transformer architecture, which is known for efficiently processing sequential data such as natural language. Unlike earlier recurrent neural networks, the transformer’s self-attention mechanism lets GPT capture very long-range dependencies in text and produce coherent, contextually appropriate replies.
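To make the attention idea more concrete, here is a minimal sketch of scaled dot-product attention, softmax(QKᵀ/√d_k)V, in plain Python. It is a toy illustration, not OpenAI’s implementation: real GPT models add learned projection matrices, many attention heads, and run on tensor libraries. The `causal` flag mimics the masking GPT-style models use so that each position attends only to itself and earlier positions; the function names are our own.

```python
import math


def softmax(scores):
    # Subtract the max for numerical stability before exponentiating.
    m = max(scores)
    exps = [math.exp(s - m) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]


def attention(queries, keys, values, causal=False):
    """Scaled dot-product attention over lists of vectors (lists of floats)."""
    d_k = len(keys[0])
    outputs = []
    for i, q in enumerate(queries):
        # With a causal mask, position i may only look at positions 0..i.
        visible = range(i + 1) if causal else range(len(keys))
        scores = [
            sum(qi * ki for qi, ki in zip(q, keys[j])) / math.sqrt(d_k)
            for j in visible
        ]
        weights = softmax(scores)
        # Each output is the attention-weighted average of the visible values.
        out = [
            sum(w * values[j][c] for w, j in zip(weights, visible))
            for c in range(len(values[0]))
        ]
        outputs.append(out)
    return outputs


# Self-attention over a two-token sequence (queries = keys = values).
tokens = [[1.0, 0.0], [0.0, 1.0]]
result = attention(tokens, tokens, tokens, causal=True)
print(result)
```

Because the first token can only see itself, its output is its own vector; the second token blends both tokens, weighting itself more heavily because its query matches its own key best.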

c.) Scalability:

GPT is highly scalable: it is trained on enormous datasets and can work with inputs ranging from short sentences to lengthy paragraphs or entire articles.

d.) Adaptability:

With relatively little fine-tuning, GPT can be adapted to many natural language tasks, such as text generation, completion, translation, summarization, and sentiment analysis.

2. TensorFlow:

Built by Google, TensorFlow is a cornerstone of the AI toolkit, providing a complete platform for building and deploying machine learning models at scale. With an extensive library of pre-built modules and a deep ecosystem, TensorFlow lets developers tackle complex tasks ranging from image recognition to predictive analytics efficiently and flexibly.

Key features of TensorFlow:

a.) Flexible Architecture:

TensorFlow’s modular architecture lets users build ML/DL models with high-level APIs (e.g., Keras) for quick prototyping, or with low-level APIs that offer greater flexibility and control over the model architecture and training process.

b.) Scalability:

TensorFlow provides various distributed computing options that let users efficiently train models on multiple GPUs or across machines in a cluster. This scalability makes TensorFlow practical for data-rich environments and computationally intensive tasks.
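The core idea behind synchronous data-parallel training can be sketched without TensorFlow at all: each worker computes gradients on its own shard of a batch, the gradients are averaged (an "all-reduce"), and every replica applies the same update. The sketch below simulates this in a single process for a one-parameter linear model with mean squared error; the function names, model, and learning rate are illustrative assumptions. In TensorFlow itself, strategies such as `tf.distribute.MirroredStrategy` (several GPUs on one machine) and `tf.distribute.MultiWorkerMirroredStrategy` (several machines) take care of this coordination.

```python
def shard_gradient(w, xs, ys):
    # Gradient of mean((w * x - y) ** 2) with respect to w over one shard.
    return sum(2 * (w * x - y) * x for x, y in zip(xs, ys)) / len(xs)


def data_parallel_step(w, xs, ys, num_workers, lr):
    # Assumes the batch divides evenly, so averaging the shard gradients
    # equals the full-batch gradient.
    assert len(xs) % num_workers == 0
    size = len(xs) // num_workers
    grads = [
        shard_gradient(w, xs[k * size:(k + 1) * size], ys[k * size:(k + 1) * size])
        for k in range(num_workers)
    ]
    avg_grad = sum(grads) / num_workers  # the "all-reduce" (mean) step
    return w - lr * avg_grad


xs = [1.0, 2.0, 3.0, 4.0]
ys = [2.0, 4.0, 6.0, 8.0]  # generated by y = 2x
w = 0.0
for _ in range(100):
    w = data_parallel_step(w, xs, ys, num_workers=2, lr=0.01)
print(round(w, 4))
```

Equal shard sizes are what make the simple mean of per-shard gradients match the full-batch gradient; with uneven shards, each gradient would need to be weighted by its shard size.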

c.) Extensive Ecosystem:

TensorFlow is complemented by a large ecosystem of libraries and tools that work alongside its core modules. Examples include TensorFlow Hub for sharing and discovering pre-trained models, TensorFlow Lite for deploying models on mobile and embedded devices, and TFX (TensorFlow Extended) for building end-to-end ML pipelines.

d.) TensorFlow Serving:

TensorFlow Serving is a system built specifically for serving models in production environments. It provides scalable, efficient infrastructure for exposing models over gRPC and HTTP/REST, which makes it straightforward to use TensorFlow models from web and mobile applications.
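As an illustration of the HTTP side, TensorFlow Serving exposes a REST predict endpoint of the form `/v1/models/<model_name>:predict` (port 8501 by default) that accepts a JSON body with an `"instances"` list. The sketch below only builds such a request using Python’s standard library; the host and the model name `my_model` are placeholder assumptions, and nothing is sent unless the commented-out lines are enabled against a running server.

```python
import json
import urllib.request


def build_predict_request(host, model_name, instances, port=8501):
    """Build (but do not send) a TensorFlow Serving REST predict request."""
    url = f"http://{host}:{port}/v1/models/{model_name}:predict"
    body = json.dumps({"instances": instances}).encode("utf-8")
    return urllib.request.Request(
        url,
        data=body,
        headers={"Content-Type": "application/json"},
        method="POST",
    )


# "my_model" is a hypothetical model name; each instance is one input row.
req = build_predict_request("localhost", "my_model", [[1.0, 2.0, 3.0]])
print(req.get_method(), req.full_url)

# To send it to a running TensorFlow Serving instance:
# with urllib.request.urlopen(req) as resp:
#     print(json.load(resp)["predictions"])
```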

3. PyTorch:

Known for its dynamic computational graphs and intuitive interface, PyTorch has become a favourite among deep learning practitioners and researchers. Originally developed by Facebook AI (now Meta AI), PyTorch lets users experiment with cutting-edge algorithms, prototype models quickly, and apply advanced techniques such as neural network pruning and reinforcement learning to push the boundaries of AI.

Key features of PyTorch:

a.) Easy to Learn and Use:

PyTorch’s simple, intuitive API lets newcomers to deep learning get started quickly. Its syntax closely resembles regular Python, which keeps the learning curve low for anyone familiar with the language.

b.) Pythonic Design:

PyTorch is exposed through the “torch” Python package, which offers a clear, readable interface that feels like ordinary Python code. This design helps developers quickly grasp the library’s logic and extend it to cover new scenarios.

c.) Tight Integration with Python Ecosystem:

PyTorch integrates seamlessly with the Python scientific computing ecosystem, including core libraries such as NumPy, SciPy, and pandas. This interoperability lets developers use existing tools and libraries to preprocess data, visualize results, and perform other tasks alongside PyTorch.

d.) Dynamic Computation Graphs:

Another advantage of PyTorch is that it builds computational graphs dynamically, as the code runs, while TorchScript provides a way to capture static graphs for optimization and deployment. Developers can therefore choose the graph representation that best suits their requirements and working style.
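The "dynamic" part means the graph is recorded while ordinary Python code executes, so control flow such as an `if` statement can change the graph from one call to the next. The following is a minimal sketch of that define-by-run idea for scalars in plain Python; the `Value` class is a hypothetical teaching aid, not PyTorch’s autograd. In PyTorch itself the equivalent is creating a tensor with `torch.tensor(2.0, requires_grad=True)`, running the computation, and calling `.backward()`, while `torch.jit.script` is one way to compile a function to TorchScript.

```python
class Value:
    """A scalar that records the operations applied to it (define-by-run)."""

    def __init__(self, data, parents=()):
        self.data = data
        self.grad = 0.0
        self._parents = parents
        self._backward = None  # set by the operation that created this node

    def _wrap(self, other):
        return other if isinstance(other, Value) else Value(other)

    def __add__(self, other):
        other = self._wrap(other)
        out = Value(self.data + other.data, (self, other))

        def backward():
            # d(a + b)/da = 1 and d(a + b)/db = 1
            self.grad += out.grad
            other.grad += out.grad

        out._backward = backward
        return out

    def __mul__(self, other):
        other = self._wrap(other)
        out = Value(self.data * other.data, (self, other))

        def backward():
            # d(a * b)/da = b and d(a * b)/db = a
            self.grad += other.data * out.grad
            other.grad += self.data * out.grad

        out._backward = backward
        return out

    def backward(self):
        # Topologically order the graph recorded during the forward pass,
        # then apply the chain rule from the output back to the inputs.
        order, seen = [], set()

        def visit(node):
            if node not in seen:
                seen.add(node)
                for parent in node._parents:
                    visit(parent)
                order.append(node)

        visit(self)
        self.grad = 1.0
        for node in reversed(order):
            if node._backward is not None:
                node._backward()


def f(x):
    # Ordinary Python control flow decides which graph gets built.
    if x.data > 0:
        return x * x * 3.0  # derivative: 6x
    return x + 1.0  # derivative: 1


x = Value(2.0)
y = f(x)
y.backward()
print(y.data, x.grad)
```

Calling `f` with a negative input builds a different, smaller graph (a single addition), which is exactly the flexibility a static graph has to encode ahead of time.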

4. Microsoft Azure AI:

As businesses increasingly embrace cloud-based systems, Microsoft Azure AI stands out as a comprehensive suite of tools and services designed to make AI accessible across a range of domains, from language understanding to vision and speech. With offerings ranging from Azure Cognitive Services to Azure Machine Learning Studio for predictive modelling, Microsoft makes it possible to integrate AI capabilities into existing business processes and helps organizations achieve better business outcomes.

Key features of Microsoft Azure AI:

a.) Scalability and Performance:

Azure AI is built on Microsoft Azure, Microsoft’s cloud computing platform, which helps ensure the availability, performance, and scalability of AI workloads. By drawing on Azure’s massive global network of data centres, customers can access AI services that scale to meet demand, with low-latency access to AI capabilities.

b.) AI DevOps:

Azure AI provides DevOps capabilities for machine learning that support the full pipeline, from model development to testing and deployment. Integrating Azure ML pipelines with Azure DevOps services such as Azure Pipelines and Azure Boards enables automated testing, continuous integration and deployment, and model versioning.

c.) Enterprise-grade Security and Compliance:

Azure AI offers enterprise-grade security, including encryption to keep AI data and applications safe. Azure services support compliance with data protection and privacy regulations and standards such as the General Data Protection Regulation (GDPR), the Health Insurance Portability and Accountability Act (HIPAA), and ISO standards, helping AI solutions meet strict data protection and privacy requirements.

d.) Hybrid and Multi-cloud Deployment:

Azure AI supports hybrid and multi-cloud deployment scenarios, letting users run AI workloads on-premises, in the cloud, or across multiple cloud environments. Azure Arc extends Azure management and governance to multi-cloud and hybrid environments, providing consistent AI deployment and management across all infrastructure types.

5. IBM Watson:

With its cognitive computing capabilities, IBM Watson aims to be a game-changer in the AI landscape, powering data-driven experiences and intelligent applications. From natural language understanding to image analysis and predictive modelling, Watson’s suite of AI services helps organizations automate processes and make better-informed decisions.

Key Features of IBM Watson:

a.) Natural Language Understanding (NLU):

IBM Watson uses sophisticated natural language processing to analyze unstructured text data. Its NLP capabilities include entity recognition, sentiment analysis, language translation, and content categorization, which let users extract insights from large volumes of text.

b.) Conversational AI:

IBM Watson provides a conversational AI platform that helps organizations build chatbots, virtual agents, and virtual assistants for customer service, support, and engagement.

c.) Visual Recognition:

IBM Watson Visual Recognition uses deep learning to analyze the content of visual media such as images and videos. Its ability to identify objects, scenes, faces, and text within images reduces the need for manual labelling and simplifies tasks like image classification, object detection, and content moderation.

d.) Machine Learning Services:

IBM Watson provides machine learning services that help data scientists and developers build custom models. These include IBM Watson Studio, an environment for developing and training machine learning models, and IBM Watson Machine Learning, a service for deploying and scaling models in production environments.

6. Hugging Face Transformers:

Hugging Face Transformers is an open-source library that gives developers access to state-of-the-art pre-trained models for understanding and generating language. As the library and its community of contributors continue to grow, it keeps raising the bar for what is possible in NLP.

Key Features of Hugging Face Transformers:

a.) State-of-the-Art NLP Models:

Hugging Face Transformers offers easily customizable, state-of-the-art models for natural language processing (NLP), including transformer-based models such as BERT, GPT, RoBERTa, and T5.

b.) Pre-trained Model Hub:

The Hugging Face Model Hub serves as a one-stop centre where users can find and download pre-trained models for NLP tasks. The hub hosts an enormous array of community-contributed models, so users can find one that suits their particular needs.

c.) Easy Model Deployment:

Hugging Face Transformers offers a simple way to integrate, fine-tune, and use models in production applications through its libraries and APIs. Developers can work with the library in widely used languages like Python, and incorporating a pre-trained model into an application takes just a few lines of code.

d.) Model Interoperability:

Hugging Face Transformers provides a consistent interface across different transformer-based models, so users can switch between model classes and try out different architectures for a given NLP task.

7. DeskTrack – Employee Monitoring Software:

DeskTrack is employee monitoring software designed to help organizations track and manage the productivity of their workforce. It offers several key features tailored to the needs of modern workplaces.

Key Features of DeskTrack:

a.) Time Tracking:

DeskTrack lets employers monitor the time employees spend on different tasks and projects throughout the workday. This helps analyze productivity levels and identify areas for improvement.

b.) Application and Website Monitoring:

It enables managers to track which applications and websites employees use during work hours, which helps identify potential distractions and ensure that employees stay focused on their tasks.

c.) Screenshots:

DeskTrack captures screenshots at regular intervals to provide visual evidence of employee activity, which promotes transparency and accountability, especially in remote work environments.

d.) Activity Level Monitoring:

By tracking keyboard and mouse activity, DeskTrack provides insights into employee engagement levels. Employers can identify idle time and take proactive steps to support their teams.
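Idle-time detection of this kind generally comes down to examining the gaps between input events. The sketch below is not DeskTrack’s implementation, whose internals are not described here; it assumes a hypothetical list of keyboard/mouse event timestamps and a configurable idle threshold, counts any gap longer than the threshold as an idle period, and counts shorter gaps as active time.

```python
from datetime import datetime, timedelta


def split_activity(events, idle_threshold):
    """Split the time between input events into active time and idle periods.

    events: timestamps of keyboard/mouse events (hypothetical input format).
    idle_threshold: gaps longer than this are treated as idle.
    """
    events = sorted(events)
    active = timedelta(0)
    idle_periods = []
    for prev, curr in zip(events, events[1:]):
        gap = curr - prev
        if gap > idle_threshold:
            idle_periods.append((prev, curr))
        else:
            active += gap
    return active, idle_periods


day = datetime(2024, 1, 15)
events = [
    day.replace(hour=9, minute=0),
    day.replace(hour=9, minute=1),
    day.replace(hour=9, minute=2),
    day.replace(hour=9, minute=20),  # 18-minute gap before this event
    day.replace(hour=9, minute=21),
]
active, idle = split_activity(events, idle_threshold=timedelta(minutes=5))
print(active, idle)
```

The five-minute threshold is an arbitrary choice for the example; a real tool would typically make it configurable.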

Conclusion:

As we take stock of AI in 2024, these major tools represent the pinnacle of the innovation the market has to offer, enabling businesses and individuals to harness AI’s capabilities as effectively as possible.

Whether through natural language processing, computer vision, or deep learning, these tools are laying the foundations for change, shaping the future of industries and driving growth in the years to come. AI is dramatically changing how businesses operate, and the future of AI is full of innovative tools, giving you every reason to embark on this exciting journey.